topics in applied religion, 5th edition, revised

12/30/2025

pseudo-historical speculation here

The prayer requirements that religions instituted were secretly the justification people needed to let themselves experience the positive psychological effects of self-reflection. It is hard to convince yourself to take time out of your day to journal and “be mindful” when you constantly have to farm and struggle to survive. However, if God explicitly requires it of you, then you get the motivation for free.

Later, in modern secular society, the decline of scheduled prayer has been accompanied by people compensating with scheduled daily journaling, dictation, and so on. Alongside this, we see waves of people resolving to start journaling on New Year’s. They feel positive for a month or so, then slowly lose the short-term motivation. Having felt the first inklings of the long-term benefits, they try hopelessly to claw that motivation back but cannot commit, and the guilt builds until they lose the habit entirely and flinch whenever someone mentions it.

Overall, even for someone who is not religious, many common religious practices likely have direct positive effects, if only because they were never selected out of society’s collective pool of ideas.

how i learned to stop becoming angry and love the ragebaiters

Unlike most generic 2025 slang, the “ragebait” trend has actually helped me personally, significantly decreasing my tendency to get angry at things. Whenever a life event could make me angry, I force myself to recognize it as pure ragebait: it is only an event that has already happened, so why should simple ragebait affect me emotionally? This works extremely well; highly recommend.

If you’re following along, an explicit phrasing of the thought to force on yourself might help:

"literally x's only goal is to make me angry, it is life's weak attempt at ragebait, it is the sparrow and i am the locked in bird."

(where x can be any abstract concept, online post, unfortunate life event, or a stranger you’ve met.)

from the perspective of a pure land buddhist

The concept of “ragebait” has been around since well before 2024, e.g. the “don’t feed the trolls” mantra of 2000s to early-2010s internet culture... and religions somehow premoved all of them by several thousand years.

Anger in Buddhism (in the Pure Land/Mahayana tradition) is viewed as an anchor to physical reality, one that binds you to the cycle of reincarnation and must be overcome. Further, it is a temporary feeling that can be minimized through the tranquility and insight gained from meditation; it is purely driven by your brain subconsciously trying to keep you reincarnating. Anger is grouped into a larger category, the “five poisons”, along with:

- ignorance (rating: 7/10 very easy to fall into)

- attachment (rating: 10/10 help i accidentally created a self)

- pride (rating: 9/10 help i accidentally created a persona)

- envy (rating: 3/10 this is actually pretty easy to avoid)

Other sentient beings might even try to provoke these in you because of their own poisons, but in the end, it is still your choice whether to let them into your own brain.

Maybe it would be useful to think of other types of “x-bait” as analogous to ragebait? E.g. if you see someone flaunting their wealth and status, the correct action is to identify it as “envybait” and clown on them. Similarly, if you achieve something difficult and commonly desired but don’t want people to hate you for it, view it as “pridebait” and never talk about it.

“Attachmentbait” is harder to use, since the strictly Buddhist interpretation of attachment, which includes sentimentality, love, and the like, is likely too broad to be valuable for non-Buddhists who aren’t seeking to leave physical reality. That said, a useful definition might limit it to material goods and to attempts to make people feel these emotions where they aren’t deserved, e.g. a job advertising itself as your family, or Hallmark cards standing in for an actual letter.

“Ignorancebait” is easy to identify; it is brainrot.

a love letter to arcaea

11/27/2025

Arcaea is basically the stereotypical indie, vertically scrolling artcore/electronica mobile rhythm game, with a story that's likely a metaphor for depression. tl;dr: moneygrab mobile game changes worldview.

I was first introduced to it back in 2024, and I remember dismissing it as "projectively transformed piano tiles"?? Yeah, I was completely clueless about rhythm games. I think I only started playing in, like, May 2025, and then over the summer I unexpectedly met two Arcaea players in the wild. They showed me completely terrifying charts that would suddenly gain two extra lanes and become six-finger charts, or that required crossing hands and arms, which completely broke my brain when I tried to read them.

They also showed me bits and pieces of the story (just Eternal Core and Luminous Sky), which captivated me even further. Massive spoilers:

Last spoiler warning. Please read the story for yourself if you have the chance.

Hikari is exposed to shards of glass containing memories and slowly collects the happy ones, but this turns out to be an excess. Eventually it leaves her numb to all feeling and experience. At this point, a new partner (Hikari Zero) is unlocked, which sets your HP bar to zero if you miss a single note, representing her loss of conscious thought. You are then forced to effectively full-combo a song to unlock the rest of the story. How interesting.

During this time, I also started playing Sekai and fell back into my long-forgotten love for Vocaloid songs after getting over my self-imposed view that they were "chronically online degen" in some sense?? Yeah, denying yourself fun to appease some nonexistent societal standard is not worth it.

Arcaea was one of the first games where I could actually feel myself improving, and by god was it motivating. Playing this rhythm game somehow rewired my brain to hit notes. The only rhythm game I had played before was osu!, which is honestly more about physical ability and accuracy than this kind of brain rewiring, and I never felt the effect there as quickly as in Arcaea, where every note was easy to physically hit. After that, I started actually getting the "rhythm" part, where playing some songs basically felt like flying with the music. Later, I started intentionally spacing out and stopped thinking consciously, so that I could hit notes and patterns "by instinct".

unfounded thought on generative AI and subliminal messaging

09/03/2025

My discomfort with generative AI output correlates decently with the "dimensionality" of the output type.

For example, I'm mostly fine with using generative AI for things like LaTeX generation and HTML formatting. Using it for general text generation is slightly unnerving, and for images much more so.

Am I just very susceptible to the uncanny valley effect? Or is it maybe easier to "completely understand" low-dimensional output?

Or maybe dimensionality correlates with original thought, and AI output makes me instinctively uncomfortable because it lacks original thought?

As of today, many AI language models tend to drift into a spiritual attractor state when their own text output is repeatedly fed back into them, which is decent evidence that subliminal messaging is possible through text, at least. With image generators, repeatedly refeeding images is known to gradually exaggerate certain features and turn the images progressively more yellow. With low-dimensional output, however, there is no real way for messages and influences like this to take hold, since the reader can perceive all of it completely.

So maybe the best practice of "mental hygiene" when dealing with AI output is to treat any high-dimensional output as a cognitohazard and avoid excessive exposure to it. Low-dimensional output likely doesn't have this hypothetical effect, maybe?

using AI in conversations also ruins the proof of work inherent in human responses

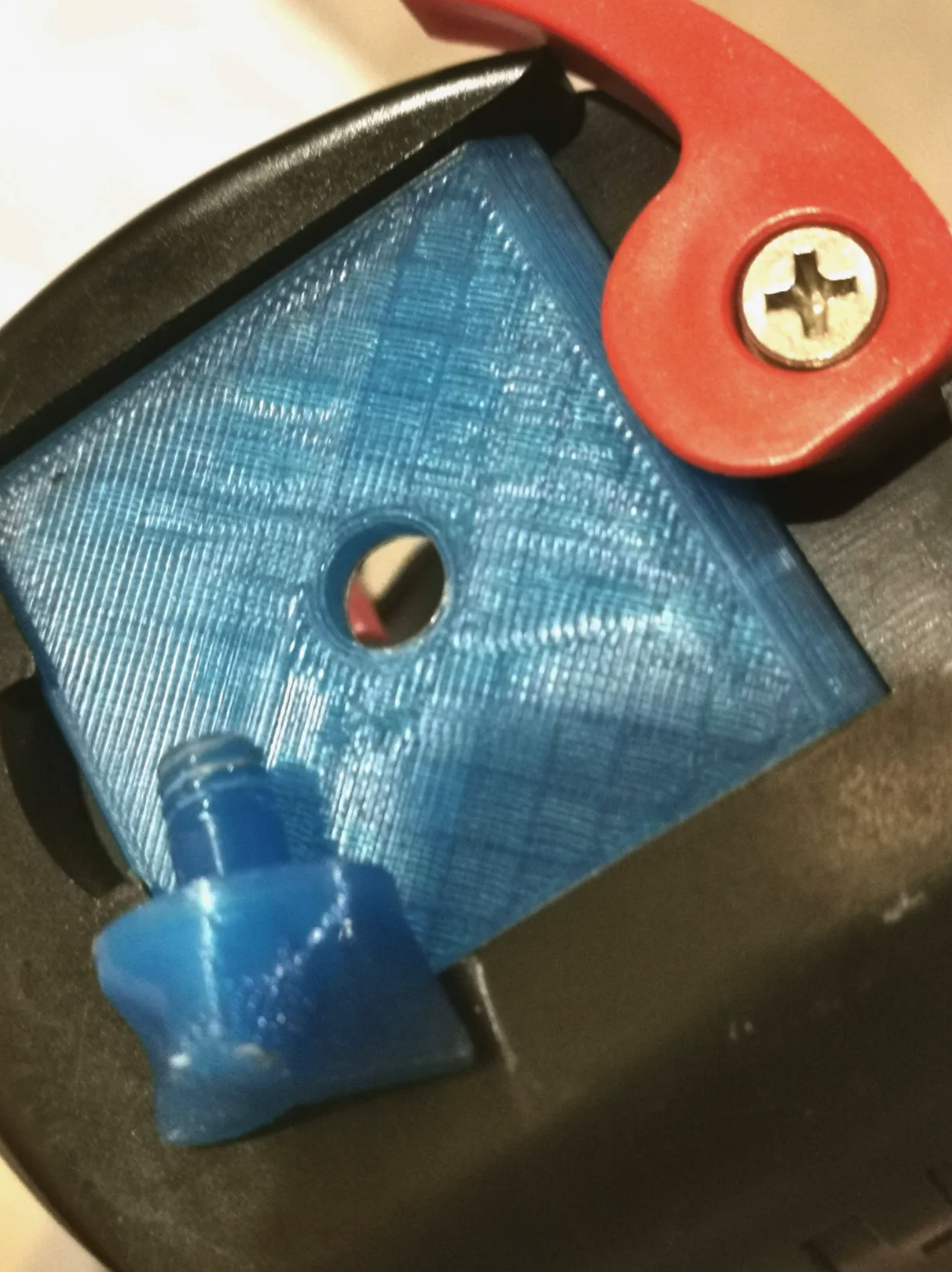

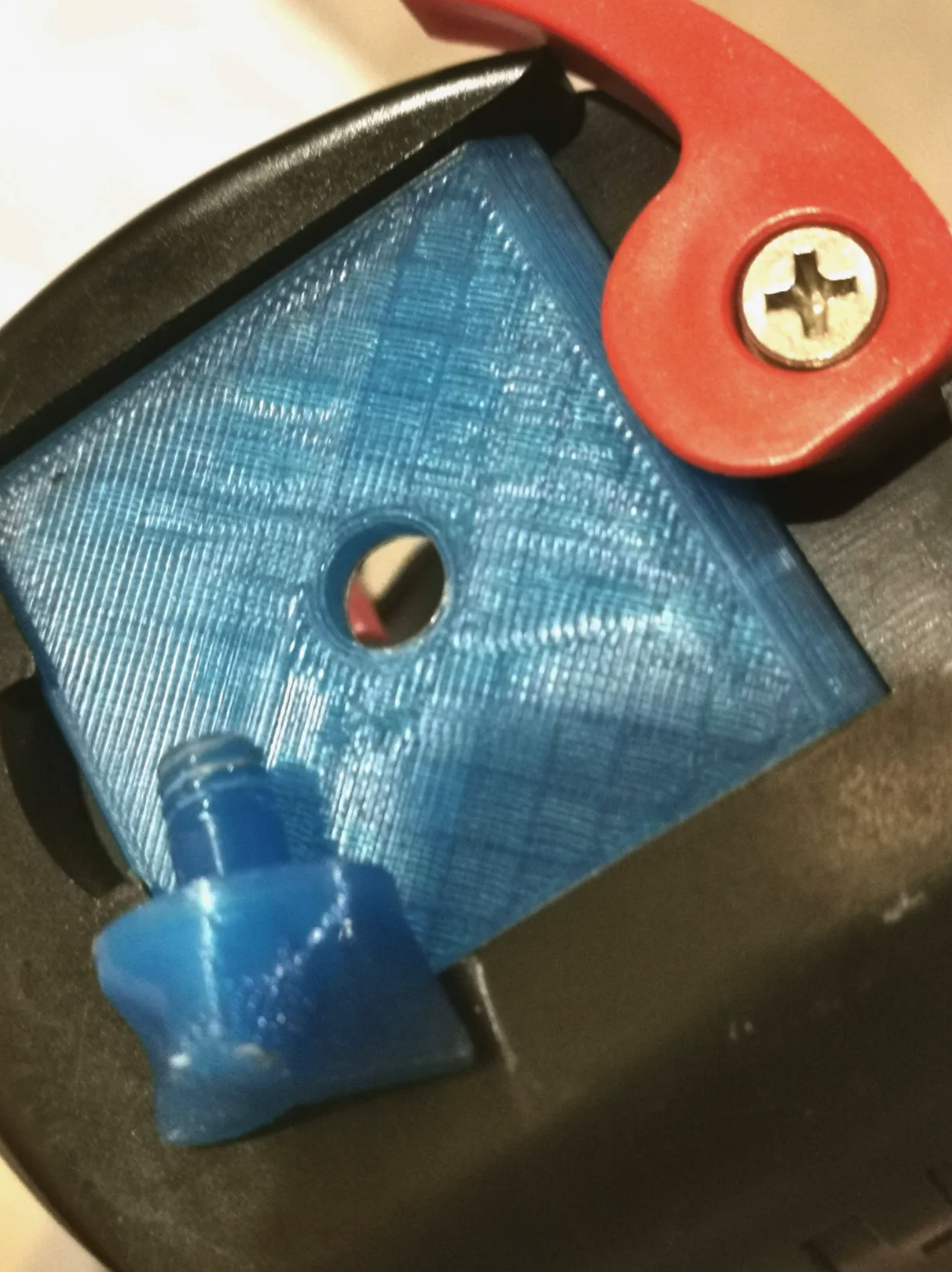

struggles with a 3d printer

07/24/2025

My 3D printer completely destroyed its extruder when an unattended print failed and extruded a large glob of plastic that hardened on the nozzle. Even after gentle heating, removing the plastic ended up taking the thermistor out with it, so, exasperated, I decided the best thing to do was just replace the whole hot end.

After spending a few days tuning the leveling and extrusion, the printer was able to print for the first time in months. So far, I've used it to print a part that adapts a standard 1/4-inch camera thread onto a broken tripod I had. Hopefully this will somewhat improve the photos on this website, lol.

stl file for the part, if you want: https://files.catbox.moe/ur2x0e.stl

The printed part for camera attachment